处理一个 socket: too many open files 问题

2019-09-19 11:14 programming

大概情况是这样的, 前几天做完离职交接, 今天看到前公司的微信群里消息爆炸了, 用户 无法在微信小程序中下单. 接手后端项目的小胖已经重启了数据库(不晓得他为啥一进服务器 就先把数据库重启了), 说还是不行.

后来另一位同事重启了 consumer 服务, 然后服务就恢复了.

查看了 docker log, 发现了一个 ``dial tcp 127.0.0.1:8202: socket: too many open files 的问题, 但是请求的 http server 也是布署在同一台服务器上, 它们也共享了同一个 docker network, 通常是 不会出现这个问题的, 之后就猜到可能是因为本地的 TCP 端口号被占完了, 或者分配给该进程 的文件句柄数被用光了, http client 无法再发起新的 socket 连接导致的.

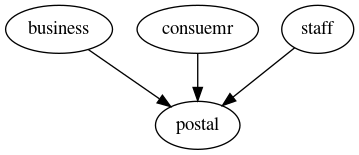

整个问题还是太清晰, 先理一下后台的服务布署情况:

其中, postal 服务提供了 GET /api/status 这个接口, 用于获取主控的状态.

该接口被 business, consumer 和 staff 使用.

在 /proc/CONSUMER_PID/fd/ 目录里面, 可以看到有数百个未关闭的 socket fd, 因为

这个服务刚被重启过, 才只剩这么多的. 同时去看了 /proc/BUSINESS_PID/fd/ 目录, 发现

里面有超过 12000 个 socket fd, 这些都是不正常的. 而 /proc/STAFF_PID/fd/ 里面只有

数百个, 因为 GET /api/status 这个接口在 staff 服务里不经常被调用.

因为是线上服务器, 可以选用访问量比较少的后台服务staff 来调试, 通过 staff 服务

请求了一下 postal 服务的 GET /api/status 接口, 发现 /proc/STAFF_PID/fd/ 里面

就多了一个 socket fd, 这种现象很稳定, 每次请求都会多一次.

问题可以大致定位到了, 估计就是 staff 里的 http client 那部分代码:

url := fmt.Sprintf("%s/api/stats/%s", settings.Current.BoxServer, box)

transport := &http.Transport{

TLSHandshakeTimeout: TlsTimeout,

}

client := http.Client{

Timeout: HttpTimeout,

Transport: transport,

}

resp, err := client.Get(url)

if err != nil {

return nil, err

}

defer resp.Body.Close()

body, err := ioutil.ReadAll(resp.Body)

if err != nil {

return nil, err

}

上面的代码也很简单, 没有多余的操作, 而且也依照 golang docs 里面要求的 关闭了 response body.

核心的问题是在:

transport := &http.Transport{

TLSHandshakeTimeout: TlsTimeout,

}

这里, 只调整了 Transport 结构体的 TLS 握时超时的选项, 其它都是默认值.

Demo

服务端, server.go:

package main

import (

"log"

"net/http"

"github.com/gin-gonic/gin"

)

func getStatus(c *gin.Context) {

c.JSON(http.StatusOK, gin.H{

"Status": "ok",

})

}

func main() {

route := gin.Default()

api := route.Group("/api")

api.GET("/status", getStatus)

if err := route.Run("127.0.0.1:4200"); err != nil {

log.Panic(err)

}

}

客户端代码, client.go:

package main

import (

"io/ioutil"

"log"

"net/http"

"time"

)

func request() {

log.Println("request()")

url := "http://127.0.0.1:4200/api/status"

transport := &http.Transport{

// ResponseHeaderTimeout: 15 * time.Second,

}

client := http.Client{

// Timeout: 30,

Transport: transport,

}

resp, err := client.Get(url)

if err != nil {

log.Println(err)

return

}

defer resp.Body.Close()

body, err := ioutil.ReadAll(resp.Body)

if err != nil {

log.Println(err)

return

}

log.Println(len(string(body)))

time.Sleep(1 * time.Millisecond)

}

func main() {

for i := 0; i < 10000; i ++ {

go request()

}

time.Sleep(1 * time.Hour)

}

以上的代码是在本地运行的, 同样在 client 那里可以看到打印的报错:

2019/09/19 15:26:34 Get http://127.0.0.1:4200/api/status: dial tcp 127.0.0.1:4200: socket: too many open files

然后看一下该进程打开了文件数:

$ ls /proc/5689/limits | wc -l

1024

这个值要比之前在服务器上面出现的要低很多, 后来确认是本地的linux 系统没有优化配置,

一个进程默认只允许打开 1024 个文件, 下面也可以看到这样的 resource limit 信息:

$ cat /proc/5689/limits

Limit Soft Limit Hard Limit Units

Max cpu time unlimited unlimited seconds

Max file size unlimited unlimited bytes

Max data size unlimited unlimited bytes

Max stack size 8388608 unlimited bytes

Max core file size 0 unlimited bytes

Max resident set unlimited unlimited bytes

Max processes 63754 63754 processes

Max open files 1024 1048576 files

Max locked memory 67108864 67108864 bytes

Max address space unlimited unlimited bytes

Max file locks unlimited unlimited locks

Max pending signals 63754 63754 signals

Max msgqueue size 819200 819200 bytes

Max nice priority 0 0

Max realtime priority 0 0

Max realtime timeout unlimited unlimited us

分析 Transport

以上问题的核心就是 golang 的 http client 并没有及时关闭 socket 连接导致的, 这个 要看它的 Transport 究竟怎么实现的了.

先看看 golang 的 transport 源码 (src/net/http/transport.go):

// Transport is an implementation of RoundTripper that supports HTTP,

// HTTPS, and HTTP proxies (for either HTTP or HTTPS with CONNECT).

//

// By default, Transport caches connections for future re-use.

// This may leave many open connections when accessing many hosts.

// This behavior can be managed using Transport's CloseIdleConnections method

// and the MaxIdleConnsPerHost and DisableKeepAlives fields.

//

// Transports should be reused instead of created as needed.

// Transports are safe for concurrent use by multiple goroutines.

//

// A Transport is a low-level primitive for making HTTP and HTTPS requests.

// For high-level functionality, such as cookies and redirects, see Client.

//

// Transport uses HTTP/1.1 for HTTP URLs and either HTTP/1.1 or HTTP/2

// for HTTPS URLs, depending on whether the server supports HTTP/2,

// and how the Transport is configured. The DefaultTransport supports HTTP/2.

// To explicitly enable HTTP/2 on a transport, use golang.org/x/net/http2

// and call ConfigureTransport. See the package docs for more about HTTP/2.

//

// Responses with status codes in the 1xx range are either handled

// automatically (100 expect-continue) or ignored. The one

// exception is HTTP status code 101 (Switching Protocols), which is

// considered a terminal status and returned by RoundTrip. To see the

// ignored 1xx responses, use the httptrace trace package's

// ClientTrace.Got1xxResponse.

//

// Transport only retries a request upon encountering a network error

// if the request is idempotent and either has no body or has its

// Request.GetBody defined. HTTP requests are considered idempotent if

// they have HTTP methods GET, HEAD, OPTIONS, or TRACE; or if their

// Header map contains an "Idempotency-Key" or "X-Idempotency-Key"

// entry. If the idempotency key value is an zero-length slice, the

// request is treated as idempotent but the header is not sent on the

// wire.

type Transport struct {

idleMu sync.Mutex

wantIdle bool // user has requested to close all idle conns

idleConn map[connectMethodKey][]*persistConn // most recently used at end

idleConnCh map[connectMethodKey]chan *persistConn

idleLRU connLRU

reqMu sync.Mutex

reqCanceler map[*Request]func(error)

altMu sync.Mutex // guards changing altProto only

altProto atomic.Value // of nil or map[string]RoundTripper, key is URI scheme

connCountMu sync.Mutex

connPerHostCount map[connectMethodKey]int

connPerHostAvailable map[connectMethodKey]chan struct{}

// Proxy specifies a function to return a proxy for a given

// Request. If the function returns a non-nil error, the

// request is aborted with the provided error.

//

// The proxy type is determined by the URL scheme. "http",

// "https", and "socks5" are supported. If the scheme is empty,

// "http" is assumed.

//

// If Proxy is nil or returns a nil *URL, no proxy is used.

Proxy func(*Request) (*url.URL, error)

// DialContext specifies the dial function for creating unencrypted TCP connections.

// If DialContext is nil (and the deprecated Dial below is also nil),

// then the transport dials using package net.

//

// DialContext runs concurrently with calls to RoundTrip.

// A RoundTrip call that initiates a dial may end up using

// a connection dialed previously when the earlier connection

// becomes idle before the later DialContext completes.

DialContext func(ctx context.Context, network, addr string) (net.Conn, error)

// Dial specifies the dial function for creating unencrypted TCP connections.

//

// Dial runs concurrently with calls to RoundTrip.

// A RoundTrip call that initiates a dial may end up using

// a connection dialed previously when the earlier connection

// becomes idle before the later Dial completes.

//

// Deprecated: Use DialContext instead, which allows the transport

// to cancel dials as soon as they are no longer needed.

// If both are set, DialContext takes priority.

Dial func(network, addr string) (net.Conn, error)

// DialTLS specifies an optional dial function for creating

// TLS connections for non-proxied HTTPS requests.

//

// If DialTLS is nil, Dial and TLSClientConfig are used.

//

// If DialTLS is set, the Dial hook is not used for HTTPS

// requests and the TLSClientConfig and TLSHandshakeTimeout

// are ignored. The returned net.Conn is assumed to already be

// past the TLS handshake.

DialTLS func(network, addr string) (net.Conn, error)

// TLSClientConfig specifies the TLS configuration to use with

// tls.Client.

// If nil, the default configuration is used.

// If non-nil, HTTP/2 support may not be enabled by default.

TLSClientConfig *tls.Config

// TLSHandshakeTimeout specifies the maximum amount of time waiting to

// wait for a TLS handshake. Zero means no timeout.

TLSHandshakeTimeout time.Duration

// DisableKeepAlives, if true, disables HTTP keep-alives and

// will only use the connection to the server for a single

// HTTP request.

//

// This is unrelated to the similarly named TCP keep-alives.

DisableKeepAlives bool

// DisableCompression, if true, prevents the Transport from

// requesting compression with an "Accept-Encoding: gzip"

// request header when the Request contains no existing

// Accept-Encoding value. If the Transport requests gzip on

// its own and gets a gzipped response, it's transparently

// decoded in the Response.Body. However, if the user

// explicitly requested gzip it is not automatically

// uncompressed.

DisableCompression bool

// MaxIdleConns controls the maximum number of idle (keep-alive)

// connections across all hosts. Zero means no limit.

MaxIdleConns int

// MaxIdleConnsPerHost, if non-zero, controls the maximum idle

// (keep-alive) connections to keep per-host. If zero,

// DefaultMaxIdleConnsPerHost is used.

MaxIdleConnsPerHost int

// MaxConnsPerHost optionally limits the total number of

// connections per host, including connections in the dialing,

// active, and idle states. On limit violation, dials will block.

//

// Zero means no limit.

//

// For HTTP/2, this currently only controls the number of new

// connections being created at a time, instead of the total

// number. In practice, hosts using HTTP/2 only have about one

// idle connection, though.

MaxConnsPerHost int

// IdleConnTimeout is the maximum amount of time an idle

// (keep-alive) connection will remain idle before closing

// itself.

// Zero means no limit.

IdleConnTimeout time.Duration

// ResponseHeaderTimeout, if non-zero, specifies the amount of

// time to wait for a server's response headers after fully

// writing the request (including its body, if any). This

// time does not include the time to read the response body.

ResponseHeaderTimeout time.Duration

// ExpectContinueTimeout, if non-zero, specifies the amount of

// time to wait for a server's first response headers after fully

// writing the request headers if the request has an

// "Expect: 100-continue" header. Zero means no timeout and

// causes the body to be sent immediately, without

// waiting for the server to approve.

// This time does not include the time to send the request header.

ExpectContinueTimeout time.Duration

// TLSNextProto specifies how the Transport switches to an

// alternate protocol (such as HTTP/2) after a TLS NPN/ALPN

// protocol negotiation. If Transport dials an TLS connection

// with a non-empty protocol name and TLSNextProto contains a

// map entry for that key (such as "h2"), then the func is

// called with the request's authority (such as "example.com"

// or "example.com:1234") and the TLS connection. The function

// must return a RoundTripper that then handles the request.

// If TLSNextProto is not nil, HTTP/2 support is not enabled

// automatically.

TLSNextProto map[string]func(authority string, c *tls.Conn) RoundTripper

// ProxyConnectHeader optionally specifies headers to send to

// proxies during CONNECT requests.

ProxyConnectHeader Header

// MaxResponseHeaderBytes specifies a limit on how many

// response bytes are allowed in the server's response

// header.

//

// Zero means to use a default limit.

MaxResponseHeaderBytes int64

// nextProtoOnce guards initialization of TLSNextProto and

// h2transport (via onceSetNextProtoDefaults)

nextProtoOnce sync.Once

h2transport h2Transport // non-nil if http2 wired up

}

核心的是这几句话:

// By default, Transport caches connections for future re-use.

// This may leave many open connections when accessing many hosts.

// This behavior can be managed using Transport's CloseIdleConnections method

// and the MaxIdleConnsPerHost and DisableKeepAlives fields.

//

// Transports should be reused instead of created as needed.

// Transports are safe for concurrent use by multiple goroutines.

这些话指出了我们之前代码的问题: 应该定义一个全局的 transport 结构体, 在多个 goroutine 之间共享.

改动后的 client.go 代码如下:

package main

import (

"io/ioutil"

"log"

"net/http"

"time"

)

var globalTransport *http.Transport

func init() {

globalTransport = &http.Transport{}

}

func request() {

log.Println("request()")

url := "http://127.0.0.1:4200/api/status"

client := http.Client{

// Timeout: 30,

Transport: globalTransport,

}

resp, err := client.Get(url)

if err != nil {

log.Println(err)

return

}

defer resp.Body.Close()

body, err := ioutil.ReadAll(resp.Body)

if err != nil {

log.Println(err)

return

}

log.Println(len(string(body)))

time.Sleep(10 * time.Millisecond)

}

func main() {

for i := 0; i < 10000; i++ {

go request()

time.Sleep(1 * time.Millisecond)

}

time.Sleep(1 * time.Hour)

}

再运行测试代码, 发现它打开的 socket fd 数量恢复到个位数了, 一切正常.

$ ls /proc/19100/fd -l

total 0

lrwx------ 1 shaohua shaohua 64 Sep 19 15:56 0 -> /dev/pts/8

lrwx------ 1 shaohua shaohua 64 Sep 19 15:56 1 -> /dev/pts/8

lrwx------ 1 shaohua shaohua 64 Sep 19 15:56 2 -> /dev/pts/8

lrwx------ 1 shaohua shaohua 64 Sep 19 15:56 3 -> 'socket:[820276]'

lrwx------ 1 shaohua shaohua 64 Sep 19 15:56 4 -> 'anon_inode:[eventpoll]'

另外的问题

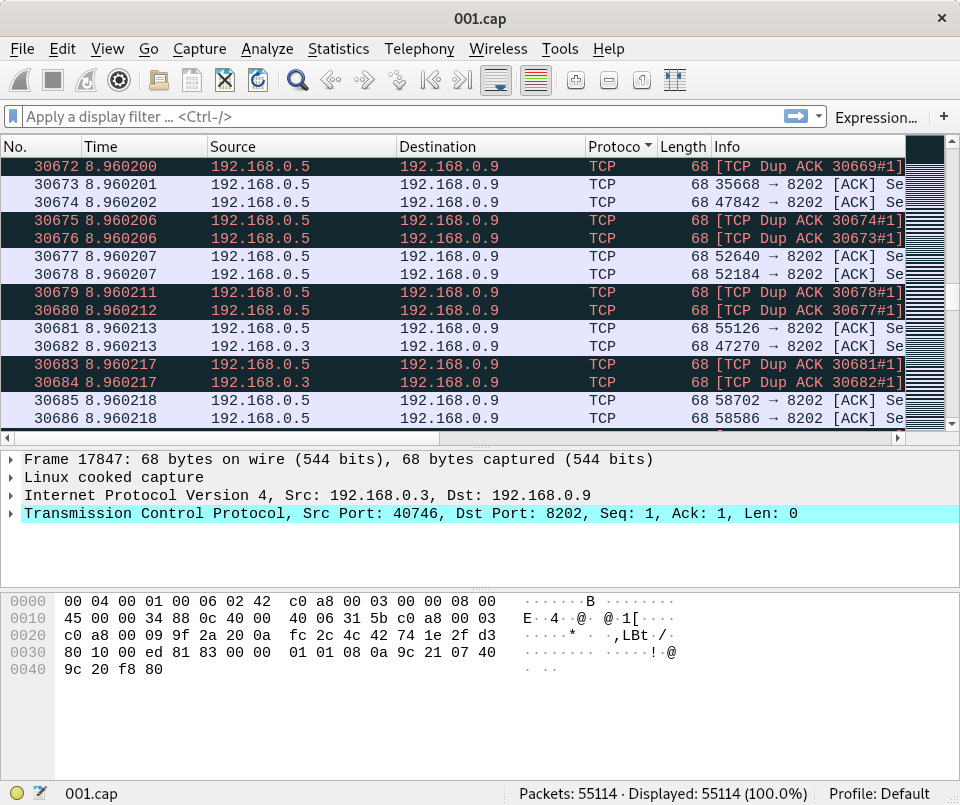

当时还用 tcpdump 抓取了 postal 服务的网络数据, 发现它里面有大量的请求在重传:

这个问题目前还没有确认出现在哪里.